SEO Case Study: How to Increase Position in Google Search

Nowadays, it’s no secret that behavioral factors play a significant role in search rankings. Google pays close attention to user experience signals such as CTR, bounce rate, time spent on a page, and return rate.

If you want your website not only to reach the top of Google search but also to maintain its position for a long time, you need to continuously monitor these metrics and work on improving them.

This raises the question: How can behavioral factors be improved, and can they be enhanced artificially?

We delved deeper into this topic and found several guides and successful case studies where webmasters shared their experiences with manipulating behavioral factors.

A closer examination of the issue revealed that manipulating behavioral factors can be used both to improve and to lower rankings. In highly competitive niches, competitors may send bot traffic to your site to degrade its behavioral metrics and push it down in search results.

The most commonly used tools for manipulating behavioral factors are ZennoPoster and BAS (Browser Automation Studio). Both are browser automation programs that let you script your actions in a browser and replay them automatically.

SEO EXPERIMENT: Test whether manipulating behavioral factors can influence a website’s ranking in Google.

We had a coupon website at our disposal with almost zero traffic.

About a year ago, it still ranked in the top 5 on Google and received some traffic. By the time the experiment began, its main keywords ranked in positions 20-30.

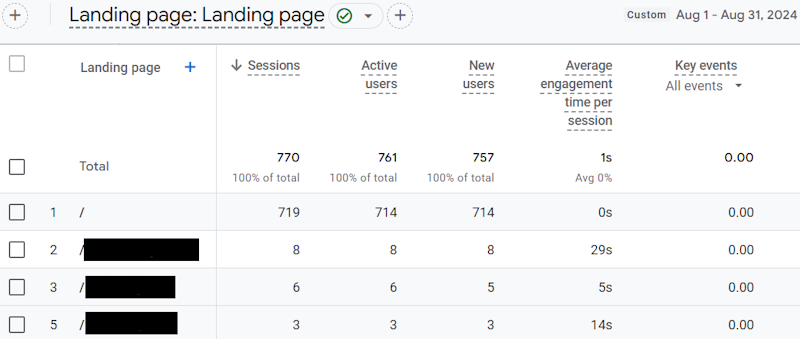

According to Google Analytics, the main traffic the site received consisted of daily bot visits, around 25 per day, to the homepage. The average session duration for bots was zero.

The pages we selected for the experiment had not received any traffic in the month before the experiment began.

We didn’t use automation software because the goal of the experiment was not to scale the manipulation of behavioral factors but rather to determine whether it still works today.

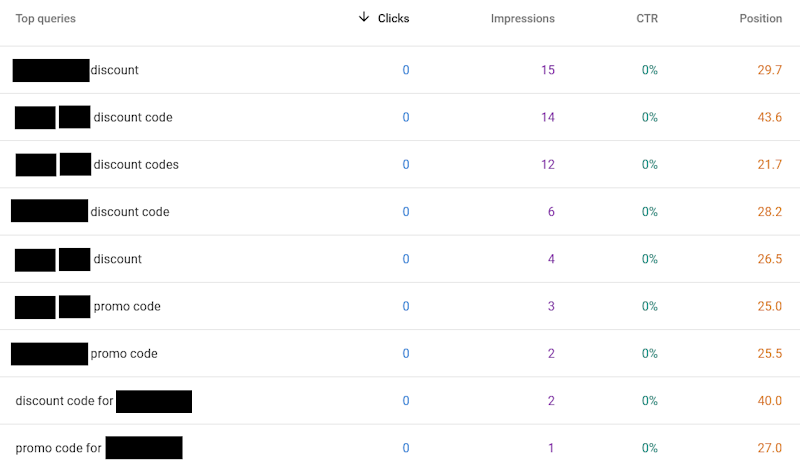

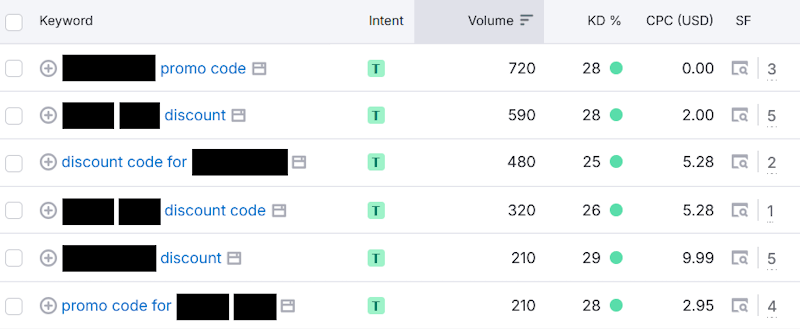

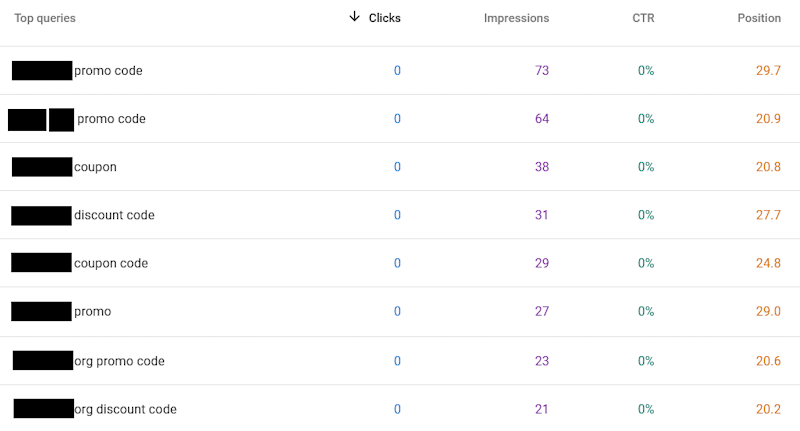

The target pages of the site were discount coupon pages for various companies, optimized for keywords such as:

- [Company Name] + discount

- [Company Name] + promo code

- [Company Name] + coupon code

- and similar search terms.

These were mostly low-volume keywords, so we assumed that 1-3 visits per day to the targeted page would be enough to influence rankings. This approach would be manageable manually without the need for automation software.

Algorithm for Manipulating Behavioral Factors

1. Select queries for which the promoted site already ranks in positions 20-30.

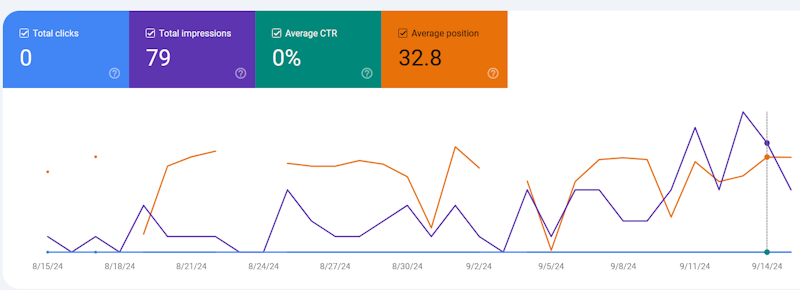

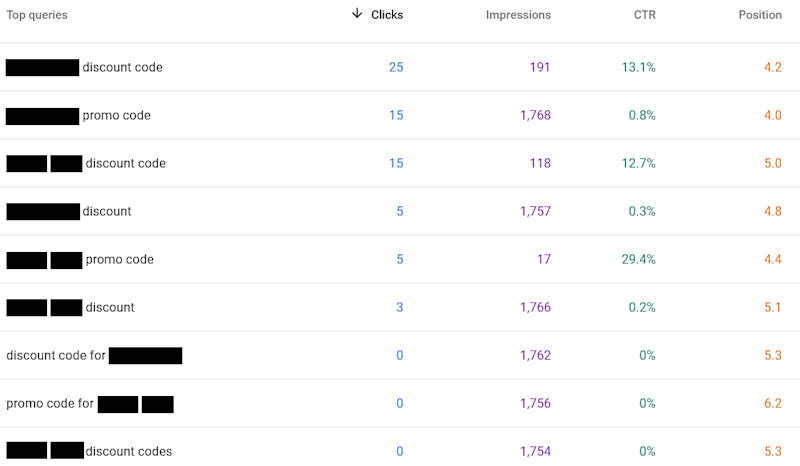

We selected a page that had an average position of 32.8 in Google Search Console at the start of the experiment.

We exported all search queries the page was ranking for into an Excel spreadsheet.
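The selection in this step can be sketched in a few lines of Python. This is only an illustration: the sample rows, the `acme` queries, and the column names (`Query`, `Position`) are assumptions, not the actual export from the experiment, so adjust them to match your own file.

```python
import csv
import io

# Hypothetical sample standing in for a Search Console query export.
# The column names are assumptions; adjust them to match your file.
SAMPLE_EXPORT = """Query,Clicks,Impressions,Position
acme promo code,0,40,24.3
acme discount,1,55,31.0
acme coupon code,0,12,8.7
acme coupons 2024,0,20,20.0
"""


def queries_in_range(rows, low=20.0, high=30.0):
    """Return (query, position) pairs whose average position is within [low, high]."""
    selected = []
    for row in rows:
        position = float(row["Position"])
        if low <= position <= high:
            selected.append((row["Query"], position))
    # Sort best-ranked first (a lower position number is better).
    return sorted(selected, key=lambda item: item[1])


rows = list(csv.DictReader(io.StringIO(SAMPLE_EXPORT)))
candidates = queries_in_range(rows)
for query, position in candidates:
    print(f"{query}: {position}")
```

To work from a real export, replace `io.StringIO(SAMPLE_EXPORT)` with an opened CSV file and widen the `low` and `high` bounds if needed.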

2. Determine the minimum number of visits required to the site.

To calculate the minimum number of daily visits required for the selected queries, the following formula is recommended:

Minimum daily visits = monthly keyword search volume / 30 * 0.5

If the keyword search volume is very low, the formula may give a result of less than one. In that case, make 1-2 visits during the testing period and monitor the results.

In our case, keyword analysis tools like Semrush show search volumes of 500 or more per month for certain keywords, but we believe most of these figures are inflated by our competitors. We estimated the actual search volume for the selected keywords at no more than 100-150 searches per month.
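As a worked example of the formula from this step, here is a minimal Python sketch. The function name `min_daily_visits` and the 40-searches-per-month case are illustrative assumptions; the other volumes are the figures mentioned above.

```python
def min_daily_visits(monthly_search_volume, share=0.5, days_in_month=30):
    """Apply the formula from this step: monthly volume / 30 * 0.5."""
    return monthly_search_volume / days_in_month * share


# 500 is the tool-reported volume, 100-150 is the authors' own estimate,
# and 40 is a hypothetical very-low-volume keyword.
for volume in (500, 150, 100, 40):
    visits = min_daily_visits(volume)
    note = " (below one visit per day)" if visits < 1 else ""
    print(f"{volume} searches/month -> {visits:.2f} visits/day{note}")
```

With the estimated 100-150 searches per month, the formula gives roughly 1.7 to 2.5 visits per day, which is in line with the 1-3 daily visits assumed earlier.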

3. Create different user behavior scenarios in search.

At this stage, you need to create a customer profile and, based on it, compile lists of websites and search queries for warming up the browser profiles.

These can include queries related to searching for the latest movies, music, products, news, recipes, nearby venues, and more.

You can quickly compile lists of search queries for accumulating cookies by extracting keywords from niche websites using Ahrefs or Semrush. Actual search queries can be found in Google Trends, and you can make them more unique using Google Autocomplete suggestions.

4. Create different user behavior scenarios on the website.

Uniqueness is necessary not only for individual pages but also for each visitor.

For example:

- The first visitor entered the target page from search, then navigated to the homepage, and from there to a product/service page.

- The second visitor entered the target page, then went to the contact page, returned to the target page, used the search function, and so on.

- The third visitor entered the target page, performed some actions, scrolled to the bottom, and then left.

We paid the most attention to user behavior on the target page. The average time spent on the page per session was around 60 seconds.

5. Use warmed-up profiles with unique browser fingerprints.

A profile with a unique browser fingerprint needs to be created for each simulated visitor. Antidetect browsers handle this task well. For the experiment, we used the AdsPower browser.

Experts recommend using mobile proxies when creating and warming up profiles. Proxy servers are essential for successful behavioral factor manipulation.

We took a different approach, using OpenVPN with 25 servers in different countries that we had at our disposal.

We created 25 unique profiles and assigned one of the available servers to each.

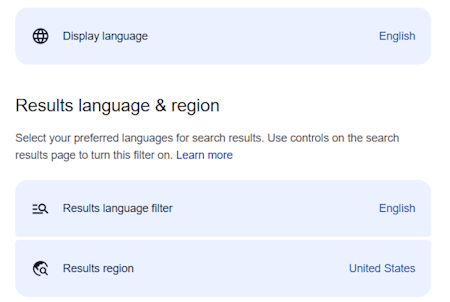

It is important to note that the main target audience of the promoted website is located in the USA.

Therefore, if the server did not match the target region, we changed the language and region settings in Google Search.

6. Start the process of manipulating behavioral factors.

During the first week, we focused on accumulating cookies for the created profiles based on the previously established scenarios.

Then we made daily visits from Google Search to the website, randomly alternating profiles and search queries.

A month into the process, we noticed positive results and decided to increase the number of profiles by purchasing IPv4 proxies from proxy-seller.com.

Currently, 40 profiles with unique browser fingerprints are in use.

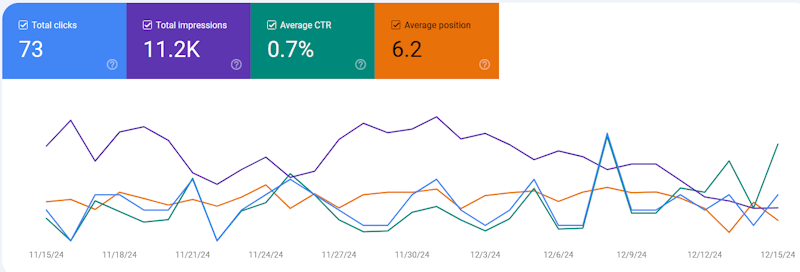

Results, two months later.

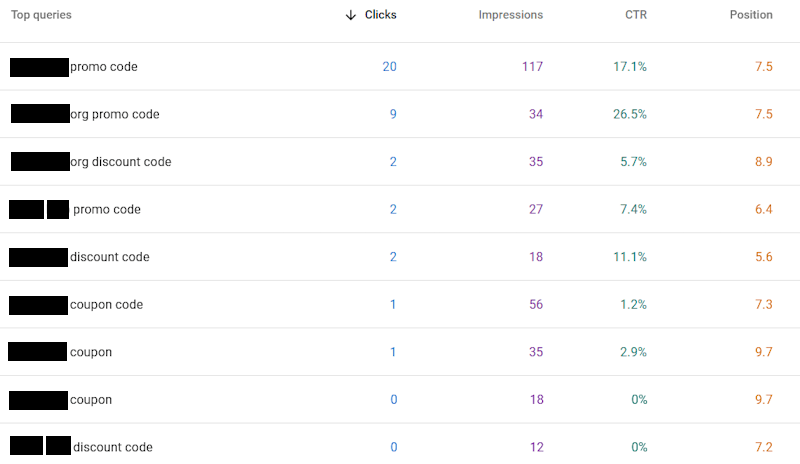

After two months of work, all the main keywords for which the page was intended to rank had entered the top 10 of Google search results.

The average position, according to Google Search Console data, rose to 6.2.

To confirm the result and rule out chance, we began working on improving the behavioral factors for another page.

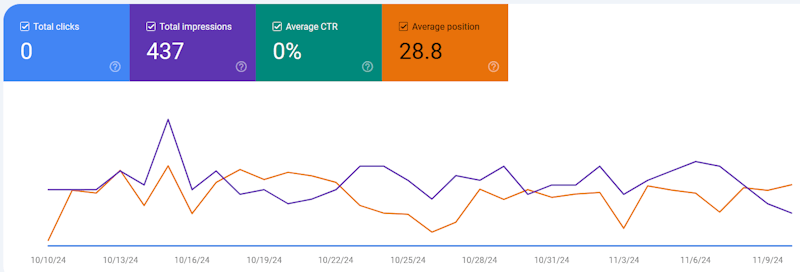

At the start of working on the second page, its average position, according to Google Search Console data, was 28.8.

All the search queries for which the second test page ranked on Google were also exported to Excel.

From this point on, we made daily visits from search results to two selected pages instead of one, randomly alternating profiles and search queries.

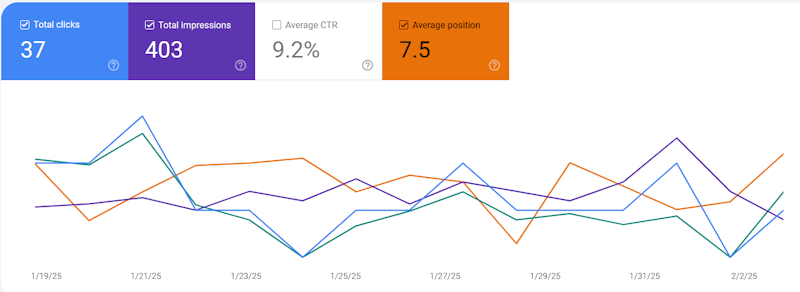

Results, four months later.

As in the first case, after two months of work, all the main keywords for which the second page was intended to rank entered the top 10.

The average position, according to Google Search Console data, rose to 7.5.

The first page also maintained a positive trend. For some search queries, it moved up by one position, while for others its position remained the same. So far, no decline in rankings has been observed.
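For reference, the average-position changes reported for the two pages (each after its own two months of work) can be tabulated with a short Python sketch. The numbers are the Google Search Console averages quoted in this article; the variable names are illustrative.

```python
# Average positions reported in the article (Google Search Console).
# Lower numbers are better, so improvement = start - end.
reported = {
    "first page": (32.8, 6.2),
    "second page": (28.8, 7.5),
}

improvements = {
    page: round(start - end, 1)
    for page, (start, end) in reported.items()
}

for page, gain in improvements.items():
    print(f"{page}: moved up {gain} positions on average")
```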

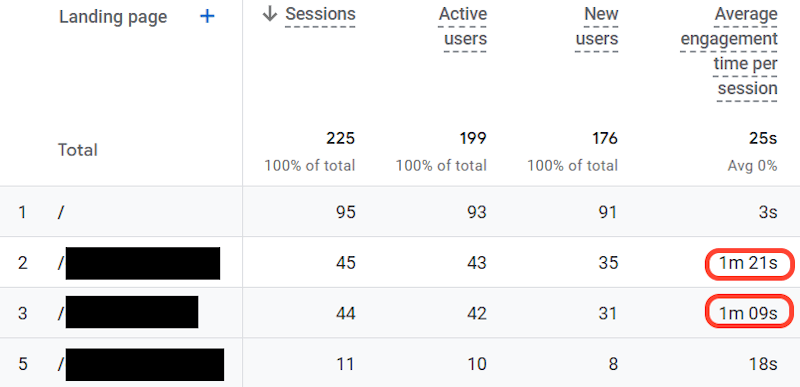

Data from Google Analytics for the last 2 weeks:

The screenshot shows that the average time spent by a user on the promoted pages is now over a minute. Approximately 20% of the visits to the site are from real users.

Our goal for the coming months is to bring the promoted pages to the first position on Google, which receives the majority of traffic.

The number of profiles will be increased to 50, and we also plan to raise the number of daily visits from search results to the promoted pages to 3-5.

When is manipulating behavioral factors necessary?

Manipulating behavioral factors becomes relevant in the following situations:

- Maximum optimization has been reached. All possible measures to improve the website have been implemented, but position growth has stalled.

- High competition. In niches where nearly all competitors use manipulation to maintain their positions, staying competitive without this method becomes challenging.

- Short-term goals. When it’s necessary to overcome a specific ranking threshold or achieve particular metrics for a given campaign.

What conclusions did we draw?

Positions improved only for the pages where behavioral factors were manipulated, while the positions of other pages on the site remained virtually unchanged.

In our experiment, manipulating behavioral factors worked, but it should not be the foundation of your SEO strategy from the start.

Ensure your website meets Google’s quality criteria, such as E-E-A-T, and provides practical value to the end user.

If you feel you’ve tried everything, you might consider manipulating behavioral factors. However, proceed with caution, as search algorithms are constantly evolving.

While Google may raise your site’s ranking today, there’s no guarantee that your site won’t face penalties tomorrow for attempts to artificially manipulate these factors.
